Ogg-Vorbis format, 52Mbytes | WAV format, 290Mbytes

i = i + 1 or i++ involves both a read and a write
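To see why the separate read and write matter, the interleaving can be spelled out by hand. This is only a single-threaded simulation of two threads A and B; the class and variable names are made up for illustration.

```java
class LostUpdate {
    // Simulates two threads each doing i++ where both reads
    // happen before either write.
    static int interleaved() {
        int i = 0;
        int readByA = i;   // thread A reads 0
        int readByB = i;   // thread B reads 0 before A has written
        i = readByA + 1;   // thread A writes 1
        i = readByB + 1;   // thread B writes 1, overwriting A's update
        return i;          // 1, although two increments were performed
    }
}
```

With real threads the interleaving is chosen by the scheduler, so the lost update shows up only occasionally.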

main() method

Thread:

    class MyThread extends Thread {
        public void run() {
            // code for my thread
        }
    }

    MyThread thr = new MyThread();
    thr.start();

Runnable:

    class MyRun implements Runnable {
        public void run() {
            // code for my thread
        }
    }

    MyRun run = new MyRun();
    new Thread(run).start();
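A minimal runnable sketch of both styles follows. The shared log list, the `runBoth()` helper and the use of `join()` to wait for both threads are additions for illustration, not part of the fragments above.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class StartDemo {
    // Style 1: subclass Thread and override run()
    static class MyThread extends Thread {
        private final List<String> log;
        MyThread(List<String> log) { this.log = log; }
        @Override public void run() { log.add("MyThread"); }
    }

    // Style 2: implement Runnable and hand it to a Thread
    static class MyRun implements Runnable {
        private final List<String> log;
        MyRun(List<String> log) { this.log = log; }
        @Override public void run() { log.add("MyRun"); }
    }

    // Hypothetical helper: starts one thread of each style and waits for both.
    static List<String> runBoth() {
        List<String> log = Collections.synchronizedList(new ArrayList<>());
        Thread a = new MyThread(log);
        Thread b = new Thread(new MyRun(log));
        a.start();   // start() schedules run() on a new thread
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        List<String> sorted = new ArrayList<>(log);
        Collections.sort(sorted);   // arrival order is up to the scheduler
        return sorted;
    }
}
```

Implementing Runnable leaves the class free to extend something else, which is one reason it is often chosen over subclassing Thread.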

while (msg = getMessage())

handle msg

while (msg = getMessage())

create new thread to handle msg
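The second loop might look roughly like this in Java. Here `inbox.poll()` stands in for `getMessage()` and the "handling" just adds up message lengths; both are assumptions made so the sketch can run on its own.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.atomic.AtomicInteger;

class MessageLoop {
    // Starts one thread per message and returns the total number of
    // characters handled once every worker has finished.
    static int handleAll(Queue<String> inbox) {
        AtomicInteger handledChars = new AtomicInteger();
        List<Thread> workers = new ArrayList<>();
        String msg;
        while ((msg = inbox.poll()) != null) {   // poll() returns null when empty
            final String m = msg;
            Thread worker = new Thread(() -> handledChars.addAndGet(m.length()));
            workers.add(worker);
            worker.start();                       // the loop does not wait here
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return handledChars.get();
    }
}
```

The loop itself never blocks on a slow message, at the cost of creating a thread for each one.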

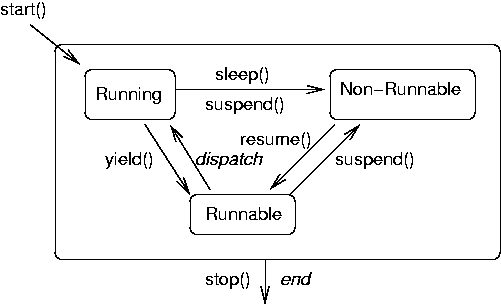

yield() it was still running "correctly" after 100,000 transactions (but would probably break sometime)

synchronized keyword

synchronized void writeLogEntry() {...}

...

synchronized(anObject) {

...

}

...

yield() does not fix the problem; synchronizing the transfer() and test() methods does

    public synchronized void test() {...}
    public synchronized void transfer(int from, int to, int amount) {...}

Synchronizing test() is redundant since it is only called from transfer(), but it does no harm

this

SynchBankTest, the synchronizing object is the Bank object b created by main()

transfer() could have been written

    public void transfer(int from, int to, int amount) {
        synchronized(this) {
            if (accounts[from] < amount) return;
            accounts[from] -= amount;
            accounts[to] += amount;
            ntransacts++;
            if (ntransacts % NTEST == 0) test();
        }
    }
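A self-contained sketch of a bank with a synchronized transfer() is given below. The class name, the `total()` check and the `runTransfers()` driver are assumptions for illustration; the original test program is not reproduced here.

```java
import java.util.Arrays;
import java.util.Random;

class SynchBank {
    private final int[] accounts;

    SynchBank(int n, int initialBalance) {
        accounts = new int[n];
        Arrays.fill(accounts, initialBalance);
    }

    // Only one thread at a time can be inside a synchronized method of this object.
    public synchronized void transfer(int from, int to, int amount) {
        if (accounts[from] < amount) return;
        accounts[from] -= amount;
        accounts[to] += amount;
    }

    public synchronized int total() {
        int sum = 0;
        for (int balance : accounts) sum += balance;
        return sum;
    }

    // Hypothetical driver: several threads make random transfers, then the
    // total is returned. Money is only moved, so the total should not change.
    static int runTransfers(int threads, int perThread) {
        SynchBank bank = new SynchBank(10, 1000);
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int seed = t;
            workers[t] = new Thread(() -> {
                Random r = new Random(seed);
                for (int n = 0; n < perThread; n++) {
                    bank.transfer(r.nextInt(10), r.nextInt(10), r.nextInt(100));
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return bank.total();
    }
}
```

Removing `synchronized` from transfer() in this sketch allows the total to drift, though it may take many runs before that is observed.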

Bank object to synchronize on

Adder objects and then uses a third shared object synchObject to avoid contention

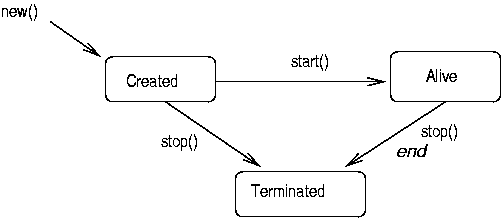

There are a number of ways in which threads can control execution

Thread.sleep(milliseconds)

Thread.yield() allows the scheduler to select another runnable thread

wait() blocks until woken up by a call to notify() or notifyAll()

notify() and notifyAll() wake up threads waiting to regain control of the monitor's lock

wait()/notifyAll() occur in pairs, associated with a synchronizing object

notifyAll() is preferred to notify()

wait() and notifyAll() can only be called while holding the object's lock, which usually means within a synchronized block or method; otherwise an IllegalMonitorStateException is thrown

    synchronized(obj) {
        ...
        obj.wait();
        ...
    }
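As a sketch of how wait() and notifyAll() pair up on one synchronizing object, here is a one-element buffer shared by a producer and a consumer. The `Slot` class and the `sumThrough()` driver are hypothetical names, not taken from the lecture code.

```java
class Slot {
    private Integer value = null;   // null means the slot is empty

    synchronized void put(int v) throws InterruptedException {
        while (value != null) wait();   // wait() releases the lock while blocked
        value = v;
        notifyAll();                    // wake any thread waiting in take()
    }

    synchronized int take() throws InterruptedException {
        while (value == null) wait();
        int v = value;
        value = null;
        notifyAll();                    // wake any thread waiting in put()
        return v;
    }

    // Hypothetical driver: pass 1..n through the slot and sum what arrives.
    static int sumThrough(int n) {
        Slot slot = new Slot();
        int[] sum = {0};
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) slot.put(i);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < n; i++) sum[0] += slot.take();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return sum[0];
    }
}
```

The condition is rechecked in a `while` loop because a woken thread has to regain the lock first, and the state may have changed by then.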

Two processes wish to communicate with each other, in a 2-way manner. Two pipes can be used for this:

When the "give way to the right" rule was in force, this was a common situation:

A resource allocation graph is a graph showing processes, resources and the connections between them. Processes are modelled by circles, resources by squares.

If a process controls a resource then there is an arrow from resource to process.

If a process requests a resource an arrow is shown the other way.

For example, P1 is using R1 and has requested R2, while P2 is using R2.

Deadlock can now occur if P2 requests R1 - setting up a cycle.
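The P1/P2 example can be checked mechanically by storing the graph as directed edges and searching for a cycle. This is a sketch only: the `AllocationGraph` class and its method names are invented for illustration, and it assumes one instance of each resource.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

class AllocationGraph {
    private final Map<String, Set<String>> edges = new HashMap<>();

    // A process controlling a resource: arrow from resource to process.
    void holds(String process, String resource) { addEdge(resource, process); }

    // A process requesting a resource: arrow from process to resource.
    void requests(String process, String resource) { addEdge(process, resource); }

    private void addEdge(String from, String to) {
        edges.computeIfAbsent(from, k -> new HashSet<>()).add(to);
    }

    // Depth-first search; reaching a node already on the current path means a cycle.
    boolean hasCycle() {
        Set<String> visited = new HashSet<>();
        Set<String> onPath = new HashSet<>();
        for (String node : edges.keySet()) {
            if (dfs(node, visited, onPath)) return true;
        }
        return false;
    }

    private boolean dfs(String node, Set<String> visited, Set<String> onPath) {
        if (onPath.contains(node)) return true;
        if (!visited.add(node)) return false;   // already fully explored
        onPath.add(node);
        for (String next : edges.getOrDefault(node, Collections.emptySet())) {
            if (dfs(next, visited, onPath)) return true;
        }
        onPath.remove(node);
        return false;
    }
}
```

Usage: after `holds("P1","R1")`, `requests("P1","R2")` and `holds("P2","R2")` there is no cycle; adding `requests("P2","R1")` creates one.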

The full set of conditions for deadlock to occur is: mutual exclusion, hold and wait, no preemption, and circular wait.

Making the transfer() method synchronized locks all accounts for a transfer between two of them

    public void transfer(int from, int to, int amount) {
        if (accounts[from] < amount) return;
        synchronized(accounts[from]) {
            synchronized(accounts[to]) {
                accounts[from] -= amount;
                accounts[to] += amount;
                ntransacts++;
                if (ntransacts % NTEST == 0) test();
            }
        }
    }

To prevent deadlocks from occurring, one of the four conditions must be disallowed.

Process must release all current resources before requesting more. This is not feasible.

    public void transfer(int from, int to, int amount) {
        if (accounts[from] < amount) return;
        int high, low;
        if (from > to) {
            high = from;
            low = to;
        } else {
            high = to;
            low = from;
        }
        synchronized(accounts[high]) {
            synchronized(accounts[low]) {
                accounts[from] -= amount;
                accounts[to] += amount;
                ntransacts++;
                if (ntransacts % NTEST == 0) test();
            }
        }
    }

Deadlock prevention is to ensure that deadlocks never occur. Deadlock avoidance is attempting to ensure that resources are never allocated in a way that might cause deadlock.
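As a sketch of prevention by ruling out circular wait, the ordered-locking transfer() above can be made self-contained as follows. Two things are assumptions rather than the lecture's code: a separate array of lock objects is used (an `int` element cannot be locked directly), and the balance check is moved inside the locks.

```java
import java.util.Arrays;

class OrderedBank {
    private final int[] accounts;
    private final Object[] locks;   // assumption: one lock object per account

    OrderedBank(int n, int initialBalance) {
        accounts = new int[n];
        Arrays.fill(accounts, initialBalance);
        locks = new Object[n];
        for (int i = 0; i < n; i++) locks[i] = new Object();
    }

    public void transfer(int from, int to, int amount) {
        // Every thread takes the higher-numbered lock first, so no cycle can form.
        int high = Math.max(from, to);
        int low = Math.min(from, to);
        synchronized (locks[high]) {
            synchronized (locks[low]) {
                if (accounts[from] < amount) return;
                accounts[from] -= amount;
                accounts[to] += amount;
            }
        }
    }

    // Hypothetical driver: two threads transfer in opposite directions between
    // accounts 0 and 1, which would risk deadlock if locks were taken in
    // from/to order. Returns the final total.
    static int runOpposing(int perThread) {
        OrderedBank bank = new OrderedBank(2, 1000);
        Thread a = new Thread(() -> {
            for (int n = 0; n < perThread; n++) bank.transfer(0, 1, 1);
        });
        Thread b = new Thread(() -> {
            for (int n = 0; n < perThread; n++) bank.transfer(1, 0, 1);
        });
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return bank.accounts[0] + bank.accounts[1];   // safe to read after join()
    }
}
```

Because Java monitors are reentrant, a transfer from an account to itself takes the same lock twice without blocking.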

There are situations that may give rise to cycles, but in practice will not lead to deadlock. For example, P1 and P2 both want R1 and R2, but in this manner:

P1 can be given R1 and P2 can be given R2. P1 will require R2 before it can give up R1, but that is ok - P2 will give up R2 before it needs R1.

The Banker's algorithm can be used to ensure this, but it is too complicated for general use.
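For reference, the safety check at the core of the Banker's algorithm can be sketched quite briefly. The array layout (`available[r]`, `allocation[p][r]`, `need[p][r]`) follows the usual textbook presentation and is an assumption here, as are the class and method names.

```java
class Banker {
    // Returns true if some order exists in which every process can obtain
    // its remaining need, finish, and release what it holds.
    static boolean isSafe(int[] available, int[][] allocation, int[][] need) {
        int processes = allocation.length;
        int[] work = available.clone();
        boolean[] finished = new boolean[processes];
        int done = 0;
        boolean progress = true;
        while (progress) {
            progress = false;
            for (int p = 0; p < processes; p++) {
                if (!finished[p] && fits(need[p], work)) {
                    // Pretend p runs to completion and returns its resources.
                    for (int r = 0; r < work.length; r++) work[r] += allocation[p][r];
                    finished[p] = true;
                    done++;
                    progress = true;
                }
            }
        }
        return done == processes;
    }

    private static boolean fits(int[] need, int[] work) {
        for (int r = 0; r < need.length; r++) {
            if (need[r] > work[r]) return false;
        }
        return true;
    }
}
```

A request is granted only if the state that would result still passes this check, which requires every process to declare its maximum needs in advance.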

Listing all the files in a directory is a recursive operation:

Each directory could be handled by a separate thread. For each entry found in a directory, its name is printed if it is an ordinary file, but if it is a directory then a new thread is created to handle it.
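A minimal sketch of the thread-per-directory idea follows. Instead of printing, it collects names into a shared synchronized list so the result can be inspected; that choice, the `list()` helper and the class name are assumptions for illustration.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class ListFilesThread extends Thread {
    private final File dir;
    private final List<String> names;   // shared by all threads, must be thread-safe

    ListFilesThread(File dir, List<String> names) {
        this.dir = dir;
        this.names = names;
    }

    @Override public void run() {
        File[] entries = dir.listFiles();
        if (entries == null) return;     // not a directory, or not readable
        List<Thread> children = new ArrayList<>();
        for (File entry : entries) {
            if (entry.isDirectory()) {
                Thread child = new ListFilesThread(entry, names);
                children.add(child);
                child.start();           // a new thread handles the subdirectory
            } else {
                names.add(entry.getName());
            }
        }
        for (Thread child : children) {
            try {
                child.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
    }

    // Hypothetical helper: list everything under root, sorted by name.
    static List<String> list(File root) {
        List<String> names = Collections.synchronizedList(new ArrayList<>());
        Thread top = new ListFilesThread(root, names);
        top.start();
        try {
            top.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        List<String> sorted = new ArrayList<>(names);
        Collections.sort(sorted);
        return sorted;
    }
}
```

Here each thread joins its children, so waiting for the top-level thread is enough to know the whole tree has been visited.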

Listing files can be made more complex by finding their size and summing them.

This one is a lot more complicated!

sum variable must be shared between all threads, so it needs to be static

sum must be synchronised

SumFilesThread object created

Thread.activeCount(). But this is an estimate only, so is not reliable

threadCount

run() is called, because there may be a gap between creation and running)

run() finishes, decrement the count

Thread class
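The points above about a static sum and a thread count can be put together roughly as follows. This is a sketch under assumptions: the shared `lock` object, the `total()` helper and the use of wait()/notifyAll() to detect a zero count are illustrative choices, and because the state is static only one summation can run at a time.

```java
import java.io.File;

class SumFilesThread extends Thread {
    private static final Object lock = new Object();   // guards sum and threadCount
    private static long sum = 0;
    private static int threadCount = 0;

    private final File dir;

    SumFilesThread(File dir) {
        this.dir = dir;
        // Count at creation, not when run() is called, so the count cannot
        // reach zero in the gap between creating and running a thread.
        synchronized (lock) { threadCount++; }
    }

    @Override public void run() {
        try {
            File[] entries = dir.listFiles();
            if (entries != null) {
                for (File entry : entries) {
                    if (entry.isDirectory()) {
                        new SumFilesThread(entry).start();
                    } else {
                        synchronized (lock) { sum += entry.length(); }
                    }
                }
            }
        } finally {
            // When run() finishes, decrement the count and wake the waiter.
            synchronized (lock) {
                threadCount--;
                lock.notifyAll();
            }
        }
    }

    // Hypothetical helper: total size in bytes of the ordinary files under root.
    static long total(File root) {
        synchronized (lock) { sum = 0; }
        new SumFilesThread(root).start();
        synchronized (lock) {
            while (threadCount > 0) {
                try {
                    lock.wait();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    break;   // give up early and return the partial sum
                }
            }
            return sum;
        }
    }
}
```

A parent always creates its children before its own run() finishes, so the count only drops to zero once every directory has been processed.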